Introduction to Apache Spark

Spark is a general-purpose distributed data processing engine built for speed, ease of use, and flexibility. The combination of these three properties is what makes Spark so popular and widely adopted in the industry.

In terms of flexibility, Spark offers a single unified data processing stack that can handle multiple types of workloads, including batch processing, interactive queries, the iterative processing needed by machine learning algorithms, and stream processing that extracts actionable insights in near real time.

A big data ecosystem consists of many pieces of technology, including a distributed storage engine such as HDFS, a cluster management system to efficiently manage a cluster of machines, and different file formats to store large amounts of data efficiently in binary and columnar formats. Spark integrates well with this ecosystem, which is another reason its adoption has grown so quickly.

Spark is also open source, so anyone can download the source code to examine it, figure out how a certain feature was implemented, or extend its functionality. In some cases, this can dramatically reduce the time needed to debug problems.

Spark core concepts and architecture

The Spark core architecture includes:

- Spark clusters

- The resource management system

- Spark applications

- Spark drivers

- Spark executors

Spark Cluster and resource management system

Spark is essentially a distributed system designed to process large volumes of data efficiently and quickly. This distributed system is typically deployed onto a collection of machines, which is known as a Spark cluster. A cluster can be as small as a few machines or as large as thousands of machines.

To efficiently and intelligently manage a collection of machines, companies rely on a resource management system such as Apache YARN or Apache Mesos. These systems follow a master-slave design: the two main components in a typical resource management system are the cluster manager (master) and the worker (slave). The cluster manager knows where the workers are located, how much memory they have, and how many CPU cores each one has. One of its main responsibilities is to orchestrate the work by assigning it to the workers. Each worker offers its resources (memory, CPU, etc.) to the cluster manager and performs the assigned work.
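The relationship between a cluster manager and its workers can be illustrated with a small toy model. This is only a sketch in plain Python, not the API of YARN, Mesos, or Spark; the `Worker` and `ClusterManager` classes and the first-fit placement rule are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    """A worker node and the resources it still has free (hypothetical model)."""
    name: str
    free_memory_gb: int
    free_cores: int


class ClusterManager:
    """Toy cluster manager: tracks workers and places work on them."""

    def __init__(self):
        self.workers = {}

    def register(self, worker):
        # A worker offers its resources to the cluster manager.
        self.workers[worker.name] = worker

    def assign(self, memory_gb, cores):
        """Reserve resources on the first worker that can fit the request.

        Returns the worker's name, or None if no worker has enough capacity.
        First-fit is an assumption here; real schedulers use richer policies.
        """
        for worker in self.workers.values():
            if worker.free_memory_gb >= memory_gb and worker.free_cores >= cores:
                worker.free_memory_gb -= memory_gb
                worker.free_cores -= cores
                return worker.name
        return None


manager = ClusterManager()
manager.register(Worker("worker-1", free_memory_gb=16, free_cores=4))
manager.register(Worker("worker-2", free_memory_gb=32, free_cores=8))

# Four identical requests: the last one no longer fits anywhere.
placements = [manager.assign(memory_gb=8, cores=4) for _ in range(4)]
print(placements)  # ['worker-1', 'worker-2', 'worker-2', None]
```

The fourth request returns `None` because both workers have run out of free cores, which is the kind of bookkeeping a real cluster manager does at much larger scale.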

Spark Application

A Spark application consists of two parts. The first is the application's data processing logic, expressed using Spark APIs, and the other is the Spark driver. The data processing logic can be as simple as a few lines of code that perform a few data processing operations, or as complex as training a large machine learning model that requires many iterations and could run for many hours.

The Spark driver is the central coordinator of a Spark application. It interacts with the cluster manager to figure out which machines to run the data processing logic on. For each of those machines, the Spark driver requests that the cluster manager launch a process called the Spark executor. Another important job of the Spark driver is to manage and distribute Spark tasks onto each executor on behalf of the application. If the data processing logic requires the Spark driver to display the computed results to a user, the driver coordinates with each Spark executor to collect the computed results and merge them together.

The entry point into a Spark application is a class called SparkSession, which provides facilities for setting up configurations as well as APIs for expressing data processing logic.
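The driver's role of splitting work into tasks, handing them out, and merging the partial results can be sketched in plain Python. This is a toy model under stated assumptions, not Spark code: threads from the standard library stand in for executors (real executors are separate processes on cluster machines), and the function names `split_into_partitions`, `count_words_task`, and `run_driver` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Split a list into roughly equal slices, one per task."""
    size, extra = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def count_words_task(lines):
    """The per-task logic: count the words in one partition."""
    return sum(len(line.split()) for line in lines)


def run_driver(data, num_executors):
    """Toy driver: hand one task per partition to a pool of workers,
    then collect the partial results and merge them."""
    partitions = split_into_partitions(data, num_executors)
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partial_counts = list(pool.map(count_words_task, partitions))
    return partial_counts, sum(partial_counts)


lines = [
    "spark is fast",
    "spark is general",
    "drivers coordinate",
    "executors run tasks",
]
partial_counts, total = run_driver(lines, num_executors=2)
print(partial_counts, total)  # [6, 5] 11
```

Each worker only sees its own partition, and only the driver sees the merged total, which mirrors the collect-and-merge step described above.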

Spark driver and executor

Each Spark executor is a JVM process and is exclusively allocated to a specific Spark application. This was a conscious design decision: executors are not shared between Spark applications, so that one badly behaving application cannot affect the others. The lifetime of a Spark executor is the duration of its Spark application, which could run for a few minutes or for a few days. Since Spark applications run in separate Spark executors, sharing data between them requires writing the data to an external storage system such as HDFS.

As depicted in the figure, Spark employs a master-slave architecture, where the Spark driver is the master and the Spark executor is the slave. Each of these components runs as an independent process on a Spark cluster. A Spark application consists of one and only one Spark driver and one or more Spark executors. Playing the slave role, each Spark executor does what it is told, which is to execute the data processing logic in the form of tasks. Each task is executed on a separate CPU core. This is how Spark speeds up the processing of a large amount of data: by processing it in parallel. In addition to executing assigned tasks, each Spark executor is responsible for caching a portion of the data in memory and/or on disk when the application logic tells it to.

When launching a Spark application, you can request how many Spark executors the application needs, and how much memory and how many CPU cores each executor should have. Figuring out an appropriate number of executors, amount of memory, and number of CPU cores requires some understanding of the amount of data to be processed, the complexity of the data processing logic, and the time within which the application should complete its processing.
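As a rough illustration of this sizing exercise, the sketch below turns a resource request into `spark-submit` arguments and estimates how many tasks can run at once. The helper names and the example numbers are assumptions for illustration. The flags `--num-executors`, `--executor-cores`, and `--executor-memory` are standard `spark-submit` options, although which ones are honored depends on the cluster manager in use. The estimate assumes one task per core, as described above.

```python
import math


def spark_submit_resource_args(num_executors, executor_cores, executor_memory_gb):
    """Build the resource-related spark-submit arguments for a request."""
    return [
        "--num-executors", str(num_executors),
        "--executor-cores", str(executor_cores),
        "--executor-memory", f"{executor_memory_gb}g",
    ]


def estimate_capacity(num_executors, executor_cores, executor_memory_gb, num_tasks):
    """Estimate parallel task slots, total executor memory, and task waves."""
    task_slots = num_executors * executor_cores  # assumes one task per core
    return {
        "task_slots": task_slots,
        "total_memory_gb": num_executors * executor_memory_gb,
        # Number of rounds needed if every slot runs one task per round.
        "waves": math.ceil(num_tasks / task_slots),
    }


# Hypothetical request: 10 executors, 4 cores and 8 GB each, 200 tasks to run.
args = spark_submit_resource_args(10, 4, 8)
capacity = estimate_capacity(10, 4, 8, num_tasks=200)
print(" ".join(args))
print(capacity)  # {'task_slots': 40, 'total_memory_gb': 80, 'waves': 5}
```

With these example numbers, 40 tasks run in parallel, so 200 tasks need about five waves. Doubling the executors would halve the waves, at the cost of twice the cluster memory.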

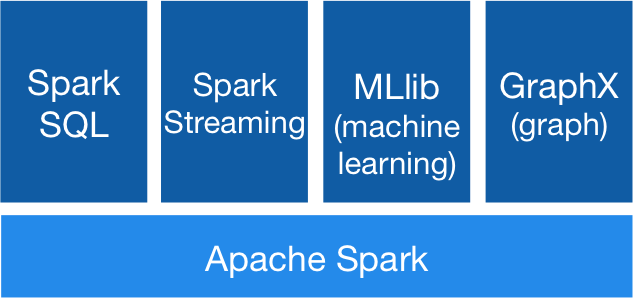

Apache Spark ecosystem components

Apache Spark's promise of faster data processing and easier development is made possible by its components. They address issues that arose when using Hadoop MapReduce. Let's go through each Spark ecosystem component one by one.

1. Apache Spark Core

Spark Core is the underlying general execution engine for the Spark platform, on which all other functionality is built. It provides in-memory computing and can reference datasets in external storage systems.

2. Spark SQL

Spark SQL is a component on top of Spark Core that introduces a data abstraction called SchemaRDD (renamed DataFrame in Spark 1.3), which provides support for structured and semi-structured data.

3. Spark Streaming

Spark Streaming uses Spark Core's fast scheduling capability to perform streaming analytics. It ingests data in mini-batches and performs RDD (Resilient Distributed Dataset) transformations on those mini-batches.
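The mini-batch idea can be sketched without Spark: group incoming events by time interval, then run the same transformation on each group. The code below is a toy model in plain Python, not the Spark Streaming API; the function names, the `(timestamp, value)` event shape, and the one-second interval are assumptions chosen for illustration.

```python
from collections import defaultdict


def to_micro_batches(events, batch_interval):
    """Group (timestamp, value) events into batches of batch_interval seconds.

    Intervals that received no events are skipped in this sketch.
    """
    batches = defaultdict(list)
    for timestamp, value in events:
        batches[int(timestamp // batch_interval)].append(value)
    # Return the batches in time order.
    return [batches[key] for key in sorted(batches)]


def process_batch(batch):
    """A per-batch transformation: count occurrences of each word."""
    counts = {}
    for word in batch:
        counts[word] = counts.get(word, 0) + 1
    return counts


events = [(0.2, "a"), (0.7, "b"), (1.1, "a"), (1.9, "a"), (2.5, "c")]
results = [process_batch(batch) for batch in to_micro_batches(events, batch_interval=1)]
print(results)  # [{'a': 1, 'b': 1}, {'a': 2}, {'c': 1}]
```

Because each batch is just a small, finite dataset, the same batch-style transformation can be reused for streaming data, which is the core of the mini-batch approach.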

4. MLlib (Machine Learning Library)

MLlib is a distributed machine learning framework on top of Spark, made possible by Spark's distributed memory-based architecture. According to benchmarks done by the MLlib developers against Alternating Least Squares (ALS) implementations, Spark MLlib is nine times as fast as the Hadoop disk-based version of Apache Mahout (before Mahout gained a Spark interface).

5. GraphX

GraphX is a distributed graph-processing framework on top of Spark. It provides an API for expressing graph computations that can model user-defined graphs by using the Pregel abstraction API. It also provides an optimized runtime for this abstraction.
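To give a feel for the Pregel abstraction, here is a minimal sketch of a vertex-centric computation in plain Python: single-source shortest paths computed in supersteps, where vertices receive messages, update their state, and send messages to their neighbors. This is not the GraphX API. The function name, the adjacency-list format, and the example graph are assumptions for illustration, and everything runs sequentially on one machine.

```python
import math


def pregel_shortest_paths(edges, source):
    """Single-source shortest paths in a vertex-centric, superstep style.

    edges maps each vertex to a list of (neighbor, weight) pairs.
    """
    vertices = set(edges)
    for neighbors in edges.values():
        vertices.update(dst for dst, _ in neighbors)

    distance = {v: math.inf for v in vertices}
    # Initial message: the source is at distance 0 from itself.
    inbox = {source: [0]}

    # Each loop iteration is one superstep; stop when no messages remain.
    while inbox:
        outbox = {}
        for vertex, messages in inbox.items():
            best = min(messages)
            if best < distance[vertex]:
                # Vertex program: keep the improvement and notify neighbors.
                distance[vertex] = best
                for dst, weight in edges.get(vertex, []):
                    outbox.setdefault(dst, []).append(best + weight)
        inbox = outbox
    return distance


graph = {"a": [("b", 1), ("c", 4)], "b": [("c", 2)], "c": [("d", 1)]}
distance = pregel_shortest_paths(graph, "a")
print(sorted(distance.items()))  # [('a', 0), ('b', 1), ('c', 3), ('d', 4)]
```

A vertex only acts when it receives a message that improves its current distance, so the computation stops on its own once no vertex has anything new to report.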

6. SparkR

SparkR is an R package that provides a lightweight frontend for using Apache Spark from R. It enables data scientists to analyze large datasets and to run jobs on them interactively from the R shell. The main idea behind SparkR was to explore different techniques for combining the usability of R with the scalability of Spark.